Guo, Yu (郭煜)

Graphics Researcher

tflsguoyu(at)gmail(dot)com

B.A → M.A → M.Phil → R.A → Ph.D (Intern → Intern → Intern → Intern) → Researcher

I was a senior researcher at Tencent Pixel Lab, based in New York, US. I received my Ph.D. in Computer Science from the University of California, Irvine, in 2021, supervised by ZHAO Shuang.

My research interests include Computer Graphics and Vision, especially material appearance modeling and physically based rendering.

Before starting my Ph.D., I was a member of the BeingThere Centre and worked on several research projects at Nanyang Technological University (Singapore) as a Research Associate.

I obtained my M.S. in Computer Science from the Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences (Shenzhen, China), advised by HENG Pheng-Ann, and my B.S. in Mathematics from Central South University (Changsha, China).

PhD:

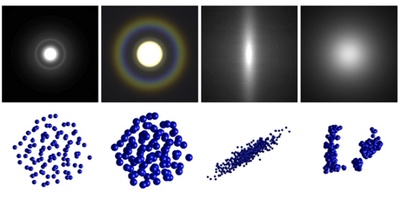

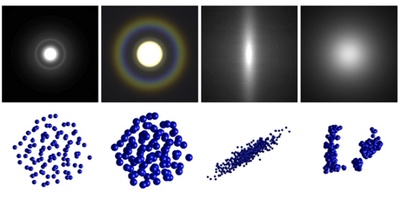

Beyond Mie Theory: Systematic Computation of Bulk Scattering Parameters based on Microphysical Wave Optics

Yu Guo, Adrian Jarabo, Shuang Zhao

ACM Transactions on Graphics, 2021

[paper]

[project page (including code)]

Light scattering in participating media and translucent materials is typically modeled using radiative transfer theory. Under the assumption of independent scattering between particles, it utilizes several bulk scattering parameters to statistically characterize light-matter interactions at the macroscale. To calculate these parameters based on microscale material properties, the Lorenz-Mie theory has been considered the gold standard. In this paper, we present a generalized framework capable of systematically and rigorously computing bulk scattering parameters beyond the far-field assumption of Lorenz-Mie theory. Our technique accounts for microscale wave-optics effects such as diffraction and interference, as well as interactions between nearby particles. Our framework is general, can be plugged into any renderer supporting Lorenz-Mie scattering, and allows arbitrary packing rates and particle correlations; we demonstrate this generality by computing bulk scattering parameters for a wide range of materials, including anisotropic and correlated media.
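For context, the classical baseline that this work generalizes turns per-particle cross sections into bulk coefficients by scaling them with the particle number density. Under that baseline, particles are assumed to scatter independently. The sketch below illustrates only that baseline and makes two further assumptions: all particles are identical spheres, and their cross sections come from elsewhere (for example, a Lorenz-Mie solver). The function name and arguments are hypothetical. It does not implement the framework described in the paper.

```python
import math

def bulk_parameters(volume_fraction, radius, c_sca, c_abs):
    """Bulk coefficients of a monodisperse sphere suspension, assuming
    independent scattering (illustrative sketch, hypothetical helper).

    volume_fraction: fraction of the medium occupied by particles, in (0, 1]
    radius:          particle radius, in some length unit L
    c_sca, c_abs:    per-particle scattering / absorption cross sections (L^2),
                     assumed to be supplied by an external solver
    Returns (sigma_s, sigma_a, sigma_t, albedo); coefficients are in 1/L.
    """
    if not 0.0 < volume_fraction <= 1.0:
        raise ValueError("volume_fraction must lie in (0, 1]")
    particle_volume = 4.0 / 3.0 * math.pi * radius ** 3
    n = volume_fraction / particle_volume  # number density, particles per L^3
    sigma_s = n * c_sca                    # scattering coefficient
    sigma_a = n * c_abs                    # absorption coefficient
    sigma_t = sigma_s + sigma_a            # extinction coefficient
    return sigma_s, sigma_a, sigma_t, sigma_s / sigma_t

# Usage with made-up numbers (radius in micrometers, cross sections in um^2):
sigma_s, sigma_a, sigma_t, albedo = bulk_parameters(0.1, 0.5, c_sca=2.0, c_abs=0.5)
```

In this baseline, every coefficient grows linearly with the packing rate. Accounting for correlated or densely packed particles is exactly where the simple scaling stops being adequate.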

MaterialGAN: Reflectance Capture using a Generative SVBRDF Model

Yu Guo, Cameron Smith, Miloš Hašan, Kalyan Sunkavalli, Shuang Zhao

ACM Transactions on Graphics, 2020

[paper]

[project page (including code)]

We address the problem of reconstructing spatially-varying BRDFs from a small set of image measurements. This is a fundamentally under-constrained problem, and previous work has relied on using various regularization priors or on capturing many images to produce plausible results. In this work, we present MaterialGAN, a deep generative convolutional network based on StyleGAN2, trained to synthesize realistic SVBRDF parameter maps. We show that MaterialGAN can be used as a powerful material prior in an inverse rendering framework: we optimize in its latent representation to generate material maps that match the appearance of the captured images when rendered. We demonstrate this framework on the task of reconstructing SVBRDFs from images captured under flash illumination using a hand-held mobile phone. Our method succeeds in producing plausible material maps that accurately reproduce the target images, and outperforms previous state-of-the-art material capture methods in evaluations on both synthetic and real data. Furthermore, our GAN-based latent space allows for high-level semantic material editing operations such as generating material variations and material morphing.
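A toy problem can illustrate the core idea of optimizing in a generator's latent space so that the rendered result matches captured images. This minimal sketch makes several substitutions, all hypothetical:

- A fixed random linear map stands in for the pretrained StyleGAN2 generator.
- The "material" is a single albedo map.
- The renderer is a Lambertian pixel-wise product with a known flash shading term.
- Plain gradient descent on the latent code replaces the optimizer and SVBRDF model used in the paper.

```python
import numpy as np

def make_toy_generator(latent_dim, num_pixels, seed=0):
    # Hypothetical stand-in for a pretrained generator:
    # a fixed linear map G(z) = W @ z + b producing a per-pixel albedo map.
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(num_pixels, latent_dim))
    b = np.full(num_pixels, 0.5)
    return W, b

def render(albedo, shading):
    # Toy Lambertian renderer: pixel value = albedo * known flash shading.
    return albedo * shading

def fit_latent(target, shading, W, b, steps=2000, lr=0.3):
    # Gradient descent on the latent code z to minimize
    # || render(G(z)) - target ||^2.
    z = np.zeros(W.shape[1])
    for _ in range(steps):
        residual = render(W @ z + b, shading) - target
        grad = 2.0 * W.T @ (shading * residual)  # chain rule through render and G
        z -= lr * grad
    return z

# Usage: synthesize a "captured" image from a known latent, then recover it.
rng = np.random.default_rng(1)
W, b = make_toy_generator(latent_dim=8, num_pixels=64)
shading = rng.uniform(0.5, 1.0, size=64)
z_true = rng.normal(size=8)
target = render(W @ z_true + b, shading)
z_fit = fit_latent(target, shading, W, b)
```

Because this toy generator is linear, the problem is convex and the latent code is recovered exactly. With a real GAN, the loss is non-convex, and the learned latent space is what keeps the solution on plausible materials.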

A Bayesian Inference Framework for Procedural Material Parameter Estimation

Yu Guo, Miloš Hašan, Lingqi Yan, Shuang Zhao

Computer Graphics Forum, 2020

[paper]

[project page (including code)]

Procedural material models have been gaining traction in many applications thanks to their flexibility, compactness, and easy editability. In this paper, we explore the inverse rendering problem of procedural material parameter estimation from photographs using a Bayesian framework. We use summary functions for comparing unregistered images of a material under known lighting, and we explore both hand-designed and neural summary functions. In addition to estimating the parameters by optimization, we introduce a Bayesian inference approach using Hamiltonian Monte Carlo to sample the space of plausible material parameters, providing additional insight into the structure of the solution space. To demonstrate the effectiveness of our techniques, we fit procedural models of a range of materials (wall plaster, leather, wood, anisotropic brushed metals, and metallic paints) to both synthetic and real target images.
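To make the sampling step concrete, here is a minimal Hamiltonian Monte Carlo sketch on a toy problem. It assumes a hypothetical linear "procedural model" whose summary statistics are `A @ theta`, together with a standard normal prior and Gaussian noise on the summary. Under these assumptions the posterior is Gaussian and its mean is known in closed form. The actual setting uses non-linear procedural models and image summary functions, which this sketch does not attempt to reproduce.

```python
import numpy as np

# Hypothetical toy model: summary statistics s(theta) = A @ theta.
A = np.array([[1.0, 0.0], [0.5, 1.0]])
SIGMA = 0.5                    # assumed noise level on the summary
S_OBS = np.array([1.0, 0.5])   # hypothetical observed summary

def neg_log_post(theta):
    # Potential energy U(theta) = -log posterior (up to a constant):
    # standard normal prior + Gaussian likelihood on the summary.
    r = A @ theta - S_OBS
    return 0.5 * theta @ theta + 0.5 * (r @ r) / SIGMA ** 2

def grad_neg_log_post(theta):
    r = A @ theta - S_OBS
    return theta + A.T @ r / SIGMA ** 2

def hmc(num_samples, step=0.2, n_leapfrog=10, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros(2)
    samples = []
    for _ in range(num_samples):
        p = rng.standard_normal(2)  # resample momentum from N(0, I)
        th, mom = theta.copy(), p.copy()
        # Leapfrog integration of Hamiltonian dynamics.
        mom -= 0.5 * step * grad_neg_log_post(th)
        for i in range(n_leapfrog):
            th += step * mom
            if i < n_leapfrog - 1:
                mom -= step * grad_neg_log_post(th)
        mom -= 0.5 * step * grad_neg_log_post(th)
        # Metropolis correction based on the change in total energy.
        h_old = neg_log_post(theta) + 0.5 * p @ p
        h_new = neg_log_post(th) + 0.5 * mom @ mom
        if np.log(rng.uniform()) < h_old - h_new:
            theta = th
        samples.append(theta.copy())
    return np.array(samples)

samples = hmc(5000)[500:]  # discard burn-in
```

Because the toy posterior is Gaussian, the sample mean can be checked against the exact posterior mean (21/26, 2/26) ≈ (0.808, 0.077). The step size and number of leapfrog steps are arbitrary choices for this example.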

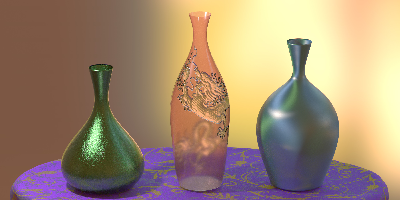

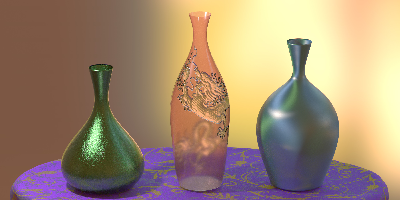

Position-Free Monte Carlo Simulation for Arbitrary Layered BSDFs

Yu Guo, Miloš Hašan, Shuang Zhao

ACM Transactions on Graphics, 2018

[paper]

[project page (including code)]

Real-world materials are often layered: metallic paints, biological tissues, and many more. Variation in the interface and volumetric scattering properties of the layers leads to a rich diversity of material appearances, from anisotropic highlights to complex textures and relief patterns. However, simulating light-layer interactions is a challenging problem. Past analytical or numerical solutions either introduce several approximations and limitations, or rely on expensive operations on discretized BSDFs, preventing the ability to freely vary the layer properties spatially. We introduce a new unbiased layered BSDF model based on Monte Carlo simulation, whose only assumption is the layer assumption itself. Our novel position-free path formulation is fundamentally more powerful at constructing light transport paths than generic light transport algorithms applied to the special case of flat layers. Because it is based on a product of solid-angle measures instead of area measures, it does not contain the high-variance geometry terms needed in the standard formulation. We introduce two techniques for sampling the position-free path integral: a forward path tracer with next-event estimation and a full bidirectional estimator. We show a number of examples featuring multiple layers with surface and volumetric scattering, surface and phase function anisotropy, and spatial variation in all parameters.
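A very simple estimator can illustrate the observation that flat, laterally uniform layers make lateral position irrelevant: a random walk that tracks only depth and direction cosine. This minimal sketch assumes:

- a single homogeneous slab with an isotropic phase function,
- index-matched (non-refracting) interfaces, and
- normal incidence.

It is a plain analog random walk. It is not the position-free path integral, nor the next-event-estimation or bidirectional estimators from the paper. The function name is hypothetical.

```python
import math
import random

def slab_rt(tau, albedo, num_paths=20_000, seed=1):
    """Estimate (reflectance, transmittance) of a flat slab by random walk.

    Assumptions (illustrative only): optical thickness tau, single-scattering
    albedo in [0, 1], isotropic phase function, index-matched interfaces, and
    light entering the top at normal incidence. Because the slab is laterally
    infinite and uniform, the state is just depth z and direction cosine mu.
    """
    rng = random.Random(seed)
    refl = trans = 0.0
    for _ in range(num_paths):
        z, mu, w = 0.0, 1.0, 1.0  # z in optical depth; mu > 0 points into the slab
        while True:
            # Free flight: optical distance ~ Exp(1), projected onto depth.
            s = -math.log(1.0 - rng.random())
            z += s * mu
            if z < 0.0:  # escaped through the top
                refl += w
                break
            if z > tau:  # escaped through the bottom
                trans += w
                break
            w *= albedo                    # absorption handled by the path weight
            mu = 2.0 * rng.random() - 1.0  # isotropic scattering: mu ~ U[-1, 1]
            if w < 0.01:  # Russian roulette keeps the estimator unbiased
                if rng.random() < 0.5:
                    break
                w *= 2.0
    return refl / num_paths, trans / num_paths
```

Two limiting cases serve as checks:

- With `albedo = 1`, every path eventually leaves through the top or bottom, so the two estimates sum to 1 (up to floating-point rounding).
- With `albedo = 0`, only unscattered light survives, so the reflectance is 0 and the transmittance approaches exp(-tau).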

Thesis:

Multi-scale Appearance Modeling of Complex Materials

Ph.D.

August 2021

[Paper]

[Slides]